Introduction

Welcome to this first blog post!

Today, we will talk about an AI project I worked on with Thibault Gaillard, a friend of mine.

Since we are both interested in AI and machine learning in general, we usually dream big. But this time, we wanted to start easy with a Q-Learning AI playing Snake.

You can find our code on our GitHub repo.

The purpose of this post is to detail our choices and observations.

Enjoy!

Environment

The game itself is adapted from the code of kiteco.

By default, Snake moves on a 10 by 10 grid. We wanted a tiny but interesting grid so that Snake would learn to eat apples and avoid walls more quickly.

At the beginning of each game, an apple is placed at a random position on the grid. We also chose to randomize the starting direction of the Snake to avoid any sort of pattern the AI could learn.
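To make the setup concrete, here is a minimal sketch of what such a reset could look like. It is not the code from the repo: the names `reset_game`, `GRID_SIZE` and `DIRECTIONS` are made up for illustration, and starting the snake in the centre of the grid is an assumption.

```python
import random

GRID_SIZE = 10  # default grid size mentioned above
# Possible headings as (dx, dy), with y growing downward: up, right, down, left.
DIRECTIONS = [(0, -1), (1, 0), (0, 1), (-1, 0)]


def reset_game(rng=random, grid_size=GRID_SIZE):
    """Return a fresh game state with a random apple and a random heading."""
    # Assumption: the snake starts as a single cell in the centre of the grid.
    head = (grid_size // 2, grid_size // 2)
    # Random starting direction, so the agent cannot rely on a fixed opening.
    direction = rng.choice(DIRECTIONS)
    # The apple goes on any cell that is not occupied by the snake.
    free_cells = [
        (x, y)
        for x in range(grid_size)
        for y in range(grid_size)
        if (x, y) != head
    ]
    apple = rng.choice(free_cells)
    return {"head": head, "direction": direction, "apple": apple}
```

Passing a seeded `random.Random` instance as `rng` makes the games reproducible, which helps when comparing training runs.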

Cobra

Cobra is the name of our deep Q-learning agent.

Cobra is not aware of the absolute position of the current apple. It only knows whether the apple is ahead of it, to its left, to its right or behind it. The same goes for obstacles (wall or tail), except that it does not need to know whether there is an obstacle behind it. This means everything is relative to the head of the snake and its direction: going left means turning to the snake's current left. It’s quite fun to play manually that way (see the repo to try).
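A state like this can be computed by projecting everything onto the snake's own axes. The sketch below is our illustration of the idea, not the repo's implementation: the function names, the tuple layout and the coordinate convention (y growing downward) are all assumptions.

```python
# Headings as (dx, dy) with y growing downward, listed clockwise.
UP, RIGHT, DOWN, LEFT = (0, -1), (1, 0), (0, 1), (-1, 0)
CLOCKWISE = [UP, RIGHT, DOWN, LEFT]


def turn(direction, steps):
    """Rotate a heading by `steps` quarter turns clockwise (negative = counter-clockwise)."""
    return CLOCKWISE[(CLOCKWISE.index(direction) + steps) % 4]


def local_state(head, direction, apple, tail, grid_size=10):
    """Describe the apple and the obstacles relative to the snake's head and heading."""
    front, right, left = direction, turn(direction, 1), turn(direction, -1)

    def blocked(d):
        # A neighbouring cell is an obstacle if it is outside the grid or part of the tail.
        x, y = head[0] + d[0], head[1] + d[1]
        return not (0 <= x < grid_size and 0 <= y < grid_size) or (x, y) in tail

    # Project the head-to-apple offset onto the snake's forward and right axes.
    dx, dy = apple[0] - head[0], apple[1] - head[1]
    ahead = dx * front[0] + dy * front[1]
    side = dx * right[0] + dy * right[1]

    return (
        ahead > 0, side < 0, side > 0, ahead < 0,        # apple: ahead, left, right, behind
        blocked(front), blocked(left), blocked(right),   # obstacles: ahead, left, right
    )
```

Because the state never mentions absolute coordinates, the same seven booleans describe a situation wherever it occurs on the grid and whichever way the snake is facing, which keeps the input of the network very small.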

Viper

Looking at our repo, you will notice an AI called Viper. This AI is “hardcoded” and is only there to test that we are giving correct information to Cobra. It simply heads toward the apple while avoiding obstacles. It is very simple and always ends up crashing into itself once it gets too long, but that is fine for testing the state we compute.
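A hardcoded policy of this kind fits in a few lines. The sketch below is only an illustration of the idea and not Viper's actual code: it assumes the state is a tuple of seven booleans (apple ahead, left, right, behind, then obstacle ahead, left, right), and the function name and action names are hypothetical.

```python
def viper_action(state):
    """Pick 'forward', 'left' or 'right': go toward the apple unless the way is blocked."""
    (apple_ahead, apple_left, apple_right, apple_behind,
     obstacle_ahead, obstacle_left, obstacle_right) = state
    blocked = {"forward": obstacle_ahead, "left": obstacle_left, "right": obstacle_right}

    # Moves that bring the snake closer to the apple come first.
    preferred = []
    if apple_ahead:
        preferred.append("forward")
    if apple_left:
        preferred.append("left")
    if apple_right:
        preferred.append("right")
    if apple_behind:
        # The snake cannot reverse, so it has to start a turn.
        preferred += ["left", "right"]

    # Fall back to any free move if none of the preferred ones is safe.
    for action in preferred + ["forward", "left", "right"]:
        if not blocked[action]:
            return action
    return "forward"  # every move is blocked; the game is lost anyway
```

A policy like this only looks one cell ahead, which is why it eventually traps itself in its own tail once the snake is long.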

Rewards

We shamelessly borrowed the reward system described here:

-100 when Cobra dies

+10 if it eats an apple

+1 if it moves toward the apple, -1 otherwise
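Written as code, the reward for one step could look like the sketch below. The values come from the list above; measuring "toward the apple" with the Manhattan distance and checking death before anything else are our assumptions, and the function names are made up for illustration.

```python
def manhattan(a, b):
    """Grid distance between two (x, y) cells."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def step_reward(died, ate_apple, old_head, new_head, apple):
    """Reward for a single move, following the three rules listed above."""
    if died:
        return -100
    if ate_apple:
        return 10
    # Assumption: "toward the apple" means the Manhattan distance got smaller.
    if manhattan(new_head, apple) < manhattan(old_head, apple):
        return 1
    return -1
```

The small +1 and -1 rewards give Cobra feedback on every single move, instead of only when it eats or dies.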

Results

At this point, we were happy that our snake was learning how to play. But we really wanted good learning parameters, and we could not find a reference with clear curves.

So we decided to gather our own statistics and observe how quickly Cobra would learn as we changed the parameters.

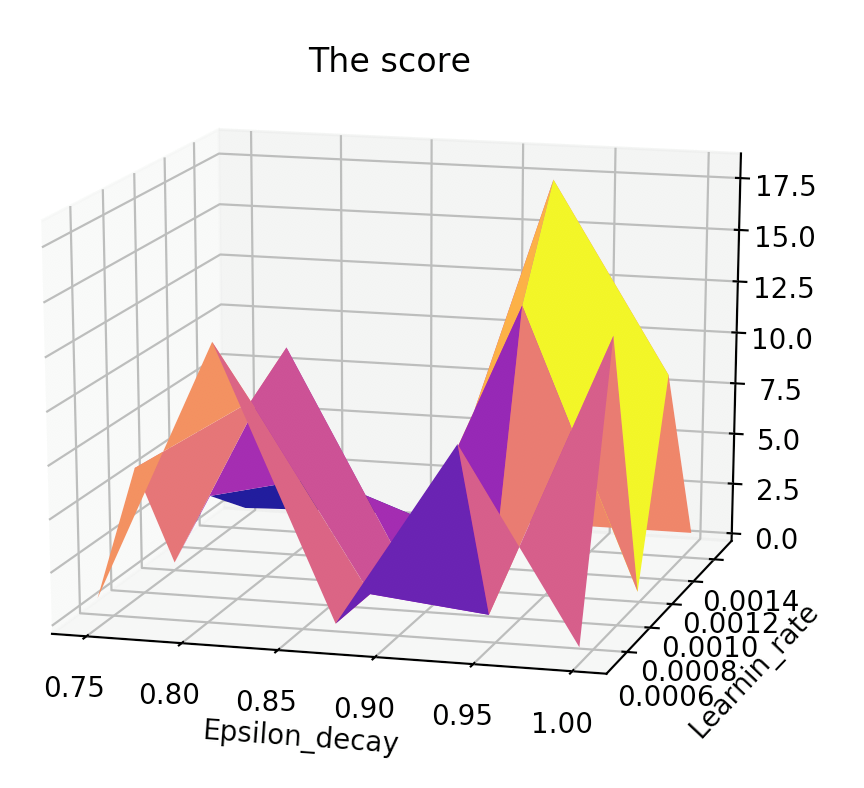

We chose to take a look at the learning rate, the epsilon decay factor and the batch size.

Unfortunately, we did not have enough computation power to vary all three parameters together. So we chose to start by varying the learning rate and the epsilon decay.
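A two-parameter sweep of this kind boils down to scoring every pair on a grid. Here is a minimal sketch, with the caveat that `sweep` and `evaluate` are hypothetical names: in practice `evaluate` would train Cobra with the given parameters and return something like its average score, which is the expensive part.

```python
from itertools import product


def sweep(evaluate, learning_rates, epsilon_decays):
    """Score every (learning_rate, epsilon_decay) pair and return all scores plus the best pair."""
    results = {
        (lr, decay): evaluate(lr, decay)
        for lr, decay in product(learning_rates, epsilon_decays)
    }
    best = max(results, key=results.get)
    return results, best


if __name__ == "__main__":
    # Toy stand-in for a real training run, only here to show the call.
    def toy_score(lr, decay):
        return -abs(lr - 0.002) - abs(decay - 0.9)

    scores, best_pair = sweep(toy_score, [0.001, 0.002, 0.003], [0.8, 0.9, 0.95])
    print(best_pair)
```

The cost grows with the product of the grid sizes, so adding a third parameter such as the batch size multiplies the number of training runs again.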

Here is the 3D curve we obtained:

We were happy to see a clearly better score for the following parameters:

Epsilon decay: 0.93675

Learning rate: 0.00125
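To get a feel for what such a decay factor means, the sketch below counts how many games it takes for the exploration rate to drop under a given floor. It assumes that epsilon starts at 1.0 and is multiplied by the decay factor once per game, which is a common schedule but not something confirmed here; the function name and the floor of 0.01 are illustrative.

```python
def games_until(epsilon_min, decay, epsilon_start=1.0):
    """Count the games needed for epsilon to fall to `epsilon_min` or below."""
    games, epsilon = 0, epsilon_start
    while epsilon > epsilon_min:
        epsilon *= decay  # assumption: one multiplicative decay step per game
        games += 1
    return games


if __name__ == "__main__":
    print(games_until(0.01, 0.93675))
```

A factor closer to 1 keeps Cobra exploring for longer, while a smaller one makes it commit to what it has learned sooner.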

We then searched for the best batch size with those parameters, and it turned out to be 228.

Conclusion

We are very satisfied with the result of our Snake AI.

We encourage you to take a look at the repo if you are interested.